This article covers the workshop “Adding Computer Vision to Robot Operating System”, as presented at the Python Ireland PyCon Limerick 2020 event. The main points of the workshop are summarised here. The workshop material, including the virtual machine and source code, is linked on this page.

The objectives of the workshop were:

- To provide a functioning ROS environment with everything tested and working together.

- To demonstrate 2 nodes communicating in a ROS environment in a realistic way.

- To demonstrate and explain how to use object detection in a way that could be expanded upon by the attendee for their own projects.

- To provide many useful links and resources to allow the attendee to continue learning if they so wished.

What is ROS?

ROS is essentially a messaging system that allows multiple sensors and programs to communicate with each other. It is better described as middleware than as an operating system. ROS was born out of the need for university researchers to stop reinventing basic robotic functionality for every robotics project they worked on, which allowed them to focus on more important aspects of robotic system design. Many groups of researchers had the same needs, so ROS is now an emerging standard in the field of robotics research. From this base ROS is expanding into commercial and industrial applications as a universal robotics standard. A milestone in its development was when Sebastian Thrun developed a full self-driving car using ROS.

Environment Setup

ROS only runs on UNIX/Linux. A typical setup may have many extra dependencies. This workshop required ROS on Ubuntu Linux, with TensorFlow for object detection, OpenCV for image manipulation, a Text to Speech API, and various other Python dependencies such as NumPy. Furthermore, all of these must be tested together before starting.

To avoid problems, a virtual machine was distributed with the environment tested and working, all the source code ready for use in Visual Studio Code, the slides and resources saved on the VM, and some useful links in the web browser for reference. The tutorials on the ROS website are useful for learning, and also for testing that the setup works. The files and folders from the ROS tutorials are in place on the virtual machine to create a usable, tested environment and a starting point for the workshop.

The virtual machine and workshop slides are available here on a shared Google Drive. Be advised that the folder is over 4 GB in size. Import the VM into Oracle VirtualBox with the “Import Appliance” command. The username is “pycon” and the password is “pycon”.

Basic Concepts of Operation

A ROS system is made up of nodes. Each node is a program or script capable of running independently. Nodes communicate using messages. There are many standard message types, and new types can be created. Messages are posted to topics, which is conceptually similar to a person posting a note on a physical notice board rather than speaking to someone directly. A node that posts messages to a topic is called a publisher. A node that waits for messages on a topic is called a subscriber to that topic. A node can be both a publisher and a subscriber, on multiple topics, at the same time.
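The notice board analogy can be sketched in a few lines of plain Python. This is a toy, in-process model and not ROS itself: the `NoticeBoard` class and the topic name `detected_object` are invented for illustration. In a real ROS node the same roles are played by `rospy.Publisher` and `rospy.Subscriber`.

```python
class NoticeBoard:
    """Toy stand-in for the ROS messaging layer (hypothetical, not a ROS API)."""

    def __init__(self):
        # Maps a topic name to the list of callbacks subscribed to it.
        self._subscribers = {}

    def subscribe(self, topic, callback):
        # A subscriber registers interest in a topic, not in a specific sender.
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, message):
        # A publisher posts to a topic without knowing who is listening.
        for callback in self._subscribers.get(topic, []):
            callback(message)


board = NoticeBoard()
heard = []

# "Subscriber node": collects every message posted to the topic.
board.subscribe("detected_object", heard.append)

# "Publisher node": posts messages; only matching topics are delivered.
board.publish("detected_object", "53")
board.publish("unrelated_topic", "ignored")

print(heard)
```

The point of the design is that the publisher and the subscriber never refer to each other, only to the topic name, which is what lets ROS nodes be started, stopped and replaced independently.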

"Speak.py" - Text to Speech

To demonstrate nodes communicating, a node called speak.py was created. This node listened to a topic that another node was publishing to. Initially a basic node sending the message “Hello World” was used, but then a much more sophisticated node was used to publish objects detected in an image. The incoming messages were looked up in a list of object classification numbers and their descriptions. For example, if the incoming message was “53”, it was checked against the list to find that “53” corresponded to “Orange”, and the word “Orange” was read out by the node. The list of objects comes from the dataset known as COCO and contains 80 common objects, from traffic lights to bananas. In this way the node could read aloud any of the 80 different objects that might be sent by the other node.
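The lookup step might look roughly like the sketch below. It is not the workshop's actual speak.py: the topic name `detected_object`, the function names, and the one-entry label table are assumptions made for illustration, and the text-to-speech call is left as a comment because the article does not name the API used. The `rospy` import is placed inside `run_node()` so that the lookup logic can be tried without ROS installed.

```python
# Partial, illustrative label table. The workshop's full list has 80 entries;
# the "53" -> "orange" pairing is the example given in the article.
LABELS = {"53": "orange"}


def describe(class_id):
    """Turn a class number received as text into the line to print and speak."""
    key = class_id.strip()
    name = LABELS.get(key)
    if name is None:
        return "Unknown object id: " + key
    return "Object Detected: " + name


def on_message(msg):
    """Subscriber callback: std_msgs String messages carry their text in .data."""
    text = describe(msg.data)
    print(text)
    # A text-to-speech call would go here (API not specified in the article).


def run_node():
    """Start the subscriber. Requires a ROS 1 environment with roscore running."""
    import rospy
    from std_msgs.msg import String

    rospy.init_node("speak")
    # "detected_object" is a hypothetical topic name.
    rospy.Subscriber("detected_object", String, on_message)
    rospy.spin()  # Block and hand incoming messages to on_message.
```

Inside a sourced ROS terminal, calling `run_node()` would start listening; outside ROS, `describe("53")` can be called directly to check the table.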

"Finder.py" - Object detection

A node called finder.py was used to demonstrate object detection being run on random images and to create a message to send to the other node. This represented what might happen in a realistic scenario, where object detection might be run on images from a camera at up to 25 frames a second, with the object classifier looking for people, cars or road signs in the images. In principle there is not much difference between running object detection on one image or on many images, so the workshop focused on how to process one image, extract useful information and send an appropriate message.

The object detection process was the subject of several questions, and a detailed section-by-section explanation of the code was given. The object detection was run using a pretrained classifier in TensorFlow. The outputs were a collection of detected objects, a number representing how confident the classifier was about each object, and coordinates for a bounding box to be drawn around each detected object. For simplicity, only the object with the highest confidence was selected from each image. A bounding box was drawn around this object to clearly illustrate what the object detection was picking out. The number corresponding to this object was put into a message and published for the other node to decipher and speak aloud.
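The selection step can be sketched without TensorFlow by working on plain lists. The function names and sample values below are made up for illustration, and the box layout (normalised `[ymin, xmin, ymax, xmax]`) is an assumption about the detector's output format rather than something stated in the workshop material.

```python
def pick_best(classes, scores, boxes):
    """Return (class_id, score, box) for the highest-scoring detection, or None."""
    if not scores:
        return None
    best = max(range(len(scores)), key=lambda i: scores[i])
    return classes[best], scores[best], boxes[best]


def to_pixels(box, width, height):
    """Convert an assumed normalised [ymin, xmin, ymax, xmax] box to
    pixel corners (left, top, right, bottom)."""
    ymin, xmin, ymax, xmax = box
    return (int(xmin * width), int(ymin * height),
            int(xmax * width), int(ymax * height))


# Made-up detector output for one 640x480 image.
classes = [53, 1, 47]
scores = [0.91, 0.40, 0.12]
boxes = [
    [0.25, 0.5, 0.75, 1.0],
    [0.0, 0.0, 0.5, 0.5],
    [0.1, 0.1, 0.2, 0.2],
]

class_id, score, box = pick_best(classes, scores, boxes)
corners = to_pixels(box, width=640, height=480)

# The class number is sent as text for the listening node to decipher.
message = str(class_id)
print(message, score, corners)
```

The pixel corners could then be handed to a drawing call such as OpenCV's `cv2.rectangle` to mark the object on the image, and `message` would be the payload published to the topic.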

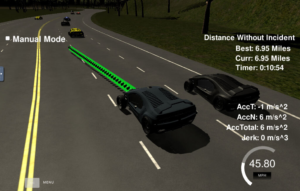

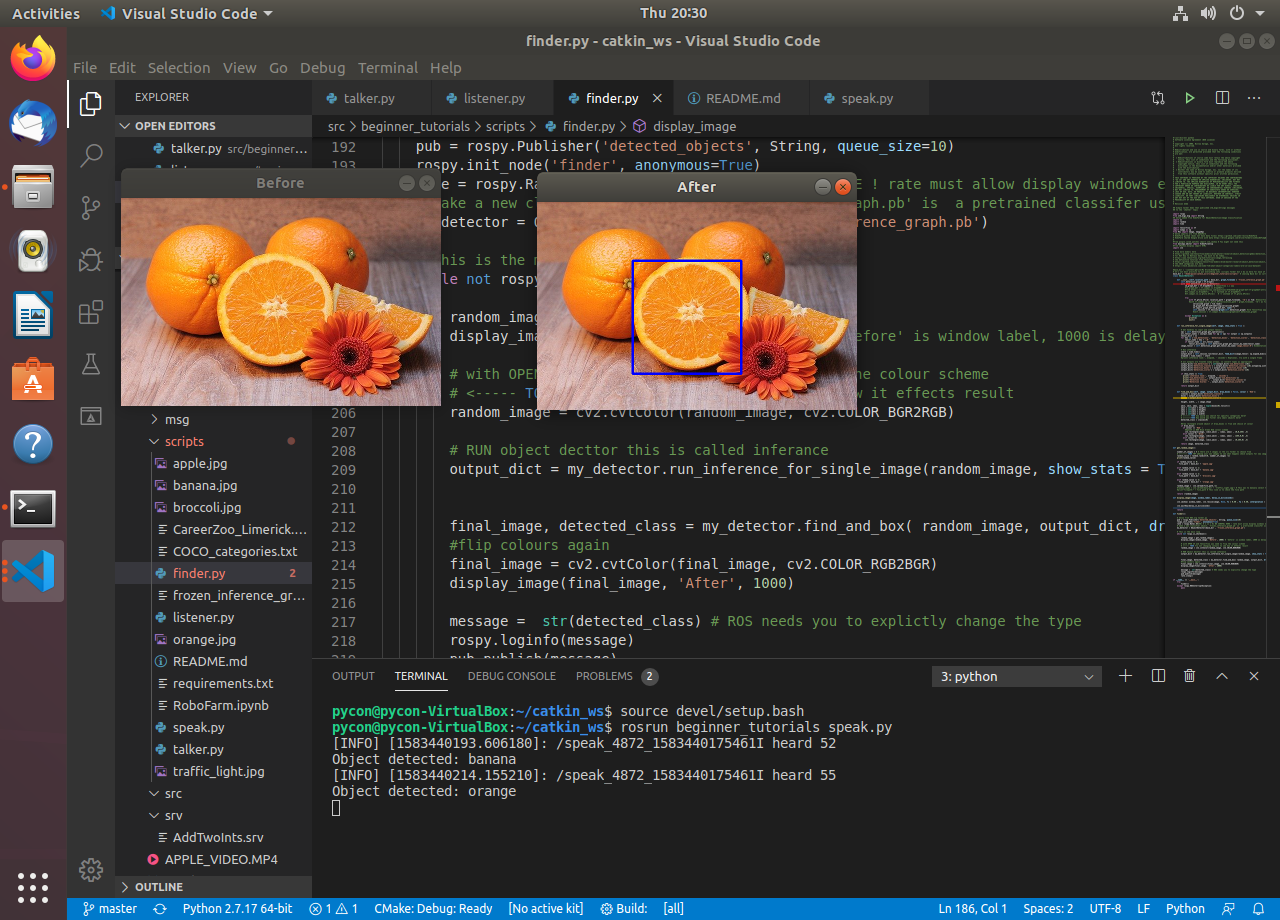

The Program running

In the above screenshot the Ubuntu virtual machine is shown running ROS through Visual Studio Code. The terminal in the lower part of the screen shows the output “Object Detected: orange”. This is the screen output from speak.py. There is also an audio output, where the word “orange” is spoken aloud. The two images displayed are before and after the object detection process: the original image, and the same image with the selected orange marked by a bounding box.

You can download the virtual machine from a shared Google Drive here. You can see instructions for running the program on the GitHub page linked here.

Important Commands

roscore : This is a program that must be running in the background the whole time you are running the ROS system. Open a terminal, type “roscore” and just let it run.

source devel/setup.bash : This command needs to be typed into every new terminal to ensure that ROS in that terminal can see everything it needs to run.

catkin_make : This is similar in concept to CMake. This command is used after changes are made and you need to “recompile” the project.

rosrun your_package your_script.py : To execute a script or node in ROS, type “rosrun” followed by the name of the package and then the name of the script.