Capstone Project

Programming a Real Self-Driving Car

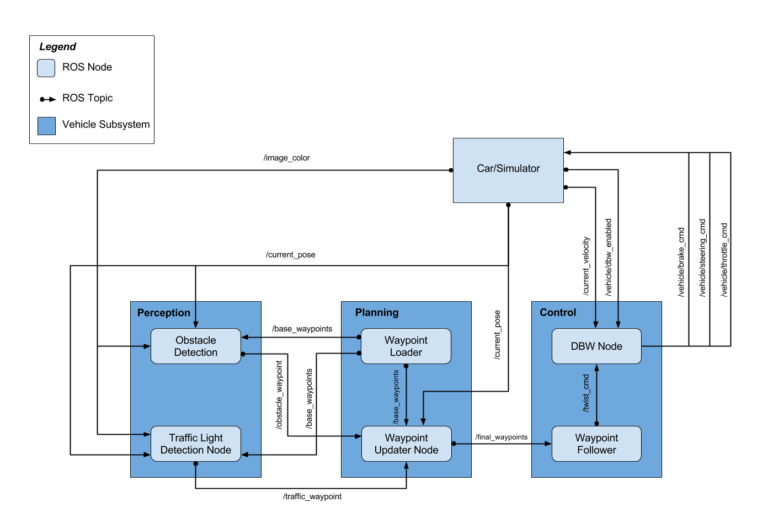

The Capstone project is where, after 9 months of online lessons and projects, we finally get to write code for a real self-driving car. This project is big, so big that it is a team project, and that brings a new set of problems. I had to form and lead a team spread across 4 countries and 3 time zones. Communication over Slack, video calls on Zoom and coordination of multiple branches of code on GitHub became indispensable. The project is built on something called ROS (Robot Operating System), which allows the different component parts to communicate. For example, information from the camera goes to one part, which in turn tells another part how to steer the car. Python is the main language used, but some parts are in C++ for speed.
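To give a feel for how parts talk to each other, here is a toy sketch in plain Python. It is not ROS and not our project code: the `MessageBus` class and the topic names `/light_state` and `/drive_command` are made up for illustration. The idea is the same though: one part publishes a message on a named topic, and any part that subscribed to that topic gets called with it.

```python
from collections import defaultdict


class MessageBus:
    """Toy stand-in for ROS topics (hypothetical, not the ROS API)."""

    def __init__(self):
        # Maps a topic name to the list of callbacks subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every subscriber of this topic.
        for callback in self._subscribers[topic]:
            callback(message)


bus = MessageBus()
drive_commands = []  # stands in for the part that actually drives the car


def on_light_state(state):
    # Hypothetical planner: brake for a red light, otherwise keep cruising.
    command = "brake" if state == "RED" else "cruise"
    bus.publish("/drive_command", command)


bus.subscribe("/light_state", on_light_state)
bus.subscribe("/drive_command", drive_commands.append)

bus.publish("/light_state", "RED")
bus.publish("/light_state", "GREEN")
print(drive_commands)  # ['brake', 'cruise']
```

In real ROS the parts run as separate processes and the messages are typed, but the publish and subscribe pattern is what holds the whole car together.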

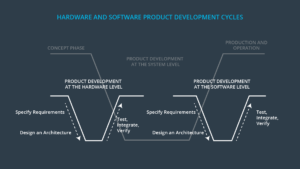

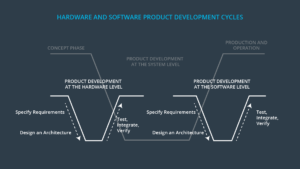

The Udacity instructors present an overview of the project and some advice on how to proceed. The project is organised in 3 sections. The first, “Perception”, takes in images from a video camera, finds traffic lights and determines what colour they are. The second, “Planning”, takes in the status of the traffic lights and adds that information to a series of way-points it is following. This is like following a GPS: you know the route but you still have to figure out where and when to stop at traffic lights. Gradually speeding up, slowing down and braking are also handled in this section. The last section, “Control”, is for steering the car and following the planned way-points. Engaging and disengaging the system is handled here so the driver can take over the vehicle with a manual override if necessary. Finally, in addition to the main sections, there are the messages that pass between the sections as they communicate with each other. Messaging is managed by ROS, as is the logging process, whereby you can get ROS to display on the screen or record to a file what is happening for later review. These logs are vital for troubleshooting.
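As a rough illustration of the slowing-down part of Planning, here is a minimal sketch that caps the target speed at each way-point so the car can come to rest at the stop line. It assumes a constant deceleration, which gives the limit v = sqrt(2 * a * d) for a way-point that is d metres from the line. The function name `decelerate` and the `max_decel` value are my own choices for the example, not the project's code.

```python
import math


def decelerate(speeds, distances_to_stop, max_decel=1.0):
    """Cap each way-point speed (m/s) so the car can stop at the line.

    distances_to_stop[i] is the distance in metres from way-point i to
    the stop line. max_decel (m/s^2) is an assumed comfort limit.
    """
    capped = []
    for speed, distance in zip(speeds, distances_to_stop):
        if distance <= 0:
            limit = 0.0  # at or past the stop line: stand still
        else:
            # Highest speed from which we can still stop in `distance`.
            limit = math.sqrt(2.0 * max_decel * distance)
        capped.append(min(speed, limit))
    return capped


# Three way-points at 50 m, 8 m and 0 m from the stop line,
# all originally planned at 10 m/s.
print(decelerate([10.0, 10.0, 10.0], [50.0, 8.0, 0.0]))  # [10.0, 4.0, 0.0]
```

Far from the light the original speed is kept, and the closer the way-point is to the stop line, the lower its speed, reaching zero at the line itself.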

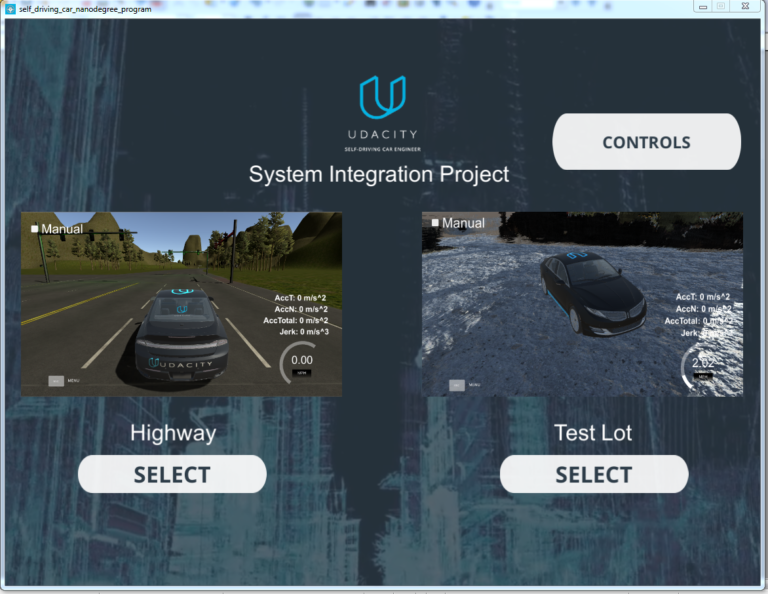

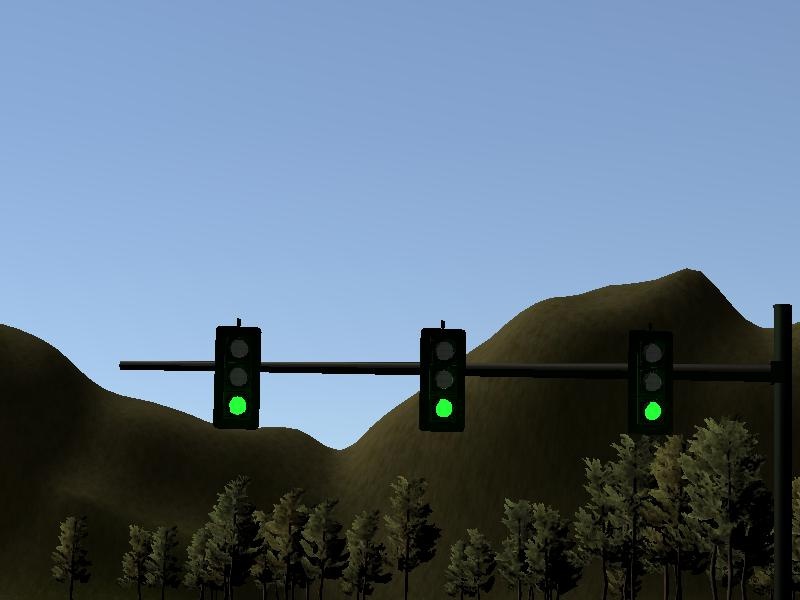

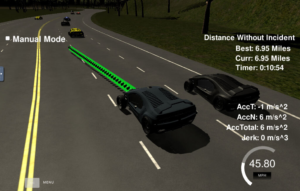

Udacity provide some amazing tools to support students. There is a complete online programming environment that you can use, including a simulator to test your code, as shown. You can also download the simulator and run it on your own machine if you have a good graphics card. Most people use the online workspace for convenience, but also because most laptops, even new ones, do not have a good enough graphics card for this project, so the online platform is very important.

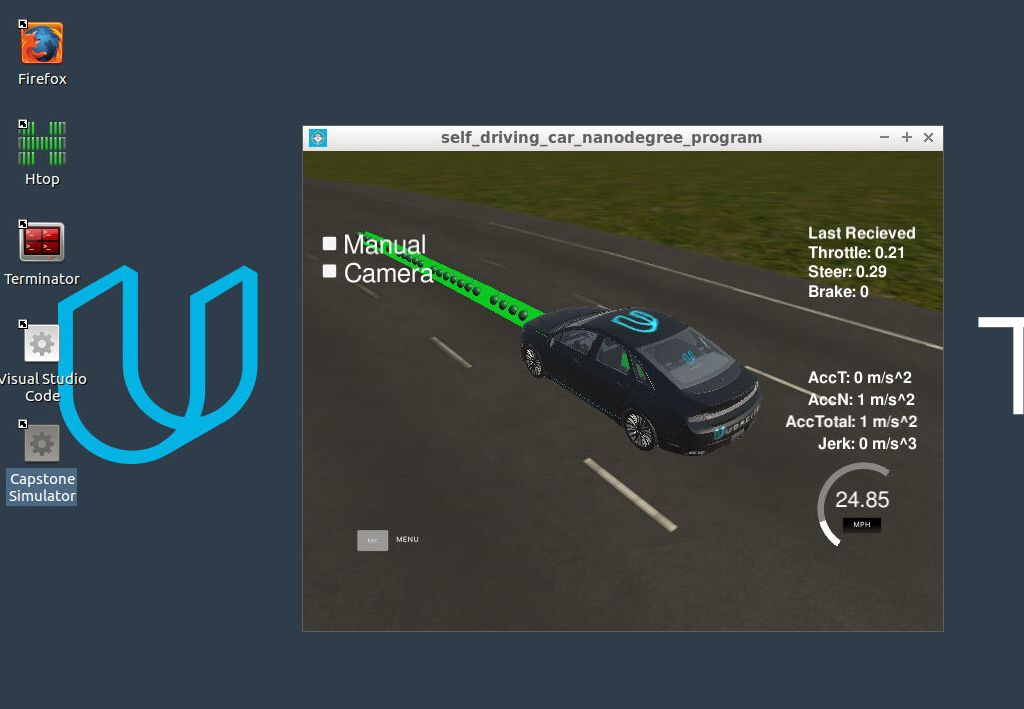

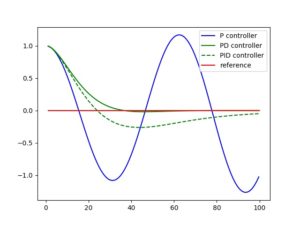

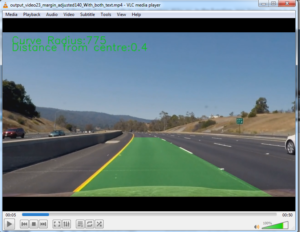

Here you can see some green dots ahead of the car. These are way-points, and they are similar to how a car might follow a GPS route. They are useful for steering but are only part of the solution. We need to take traffic lights and other conditions into account and also make sure we speed up and slow down gently. We also need to return gradually to the route if we leave it for a while. So if we go into manual mode, change lane and then go back to automatic, we need to return to the route gently, without a jarring sideways motion. We created mathematical functions to steer smoothly and adjust speed for all of this. We used something called a PID controller to help, and a lot of experimentation went into tuning it to give nice smooth steering and speed changes.
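For anyone curious what a PID controller looks like, here is a minimal textbook sketch, not our tuned controller. The output is the sum of three terms: one proportional to the current error (P), one to the accumulated error (I) and one to how fast the error is changing (D). The gains and limits below are placeholder values picked for the example.

```python
class PID:
    """Textbook PID controller with a clamped output."""

    def __init__(self, kp, ki, kd, out_min=float("-inf"), out_max=float("inf")):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.last_error = None

    def step(self, error, dt):
        """Return the control output for `error` after `dt` seconds."""
        integral = self.integral + error * dt
        # No derivative term on the very first sample.
        if self.last_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.last_error) / dt
        raw = self.kp * error + self.ki * integral + self.kd * derivative
        output = max(self.out_min, min(self.out_max, raw))
        # Simple anti-windup: only keep the new integral when the
        # output was not clamped.
        if output == raw:
            self.integral = integral
        self.last_error = error
        return output


# Placeholder gains: throttle between 0 and 1, car at 8 m/s, target 10 m/s.
speed_pid = PID(kp=0.3, ki=0.1, kd=0.0, out_min=0.0, out_max=1.0)
throttle = speed_pid.step(error=10.0 - 8.0, dt=0.02)
print(round(throttle, 3))  # 0.604
```

Most of the experimentation goes into choosing the three gains: too much P and the car oscillates, too little and it reacts sluggishly.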

You may have heard terms like Artificial Intelligence or Machine Learning. What exactly they mean is a bit hard to explain, but essentially various companies have produced programming systems that can find and learn patterns in information. In our case we used something called TensorFlow from Google. This is a library of tools that can, among other things, be used to find objects in images. In our project we used a 2-step process. The first step used a TensorFlow object detection model (called ssd_mobilenet_v1_ppn_coco, if you really want to know). This was able to find the traffic lights and extract them from the video feed one image at a time. The smaller images of traffic lights were then fed into a second stage, where another machine learning part decided what colour the traffic light was. The complete process was very technical and will have to be the subject of another article, as it is too long to explain here.
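Just to give a flavour of the hand-off between the two stages, here is a small sketch of turning detector output into crop rectangles. It assumes the detector returns boxes as normalised [ymin, xmin, ymax, xmax] values between 0 and 1 together with a confidence score per box, which is the convention used by the TensorFlow object detection models. The function name `boxes_to_crops` and the 0.5 score threshold are my own choices for the example, not our actual code.

```python
def boxes_to_crops(boxes, scores, image_width, image_height, min_score=0.5):
    """Convert normalised boxes to pixel rectangles (left, top, right, bottom).

    Boxes scoring below `min_score` (an assumed threshold) are dropped.
    """
    crops = []
    for (ymin, xmin, ymax, xmax), score in zip(boxes, scores):
        if score < min_score:
            continue  # detector is not confident enough about this box
        left, right = int(xmin * image_width), int(xmax * image_width)
        top, bottom = int(ymin * image_height), int(ymax * image_height)
        # Skip degenerate boxes that would give an empty crop.
        if right > left and bottom > top:
            crops.append((left, top, right, bottom))
    return crops


# Two detections in an 800x600 frame; the second is too uncertain to keep.
boxes = [[0.25, 0.5, 0.5, 0.75], [0.1, 0.1, 0.2, 0.2]]
scores = [0.9, 0.3]
print(boxes_to_crops(boxes, scores, 800, 600))  # [(400, 150, 600, 300)]
```

Each rectangle can then be cut out of the frame and passed to the second stage, which only ever sees a small image of a single traffic light.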

However, one aspect that is important and easy to explain is the idea of training. Every system of pattern recognition or machine learning needs to be trained on sample data to teach the program what to look for. For this learning to be done well you need as many sample images as possible. In our case we made special training images from the simulator. We added a function to our program that would generate the images. During normal use this function was disabled. In the above images you can see that we generated over 6000 files for this purpose. In addition we used ready-made images from a data set called the Bosch data set, with many thousands more. We also used sample images recorded by a real self-driving car going around the test track. This is one of the things about machine learning: you very quickly start to eat up RAM and disk space on your computer, and this project was enough to stretch even a high-end machine.

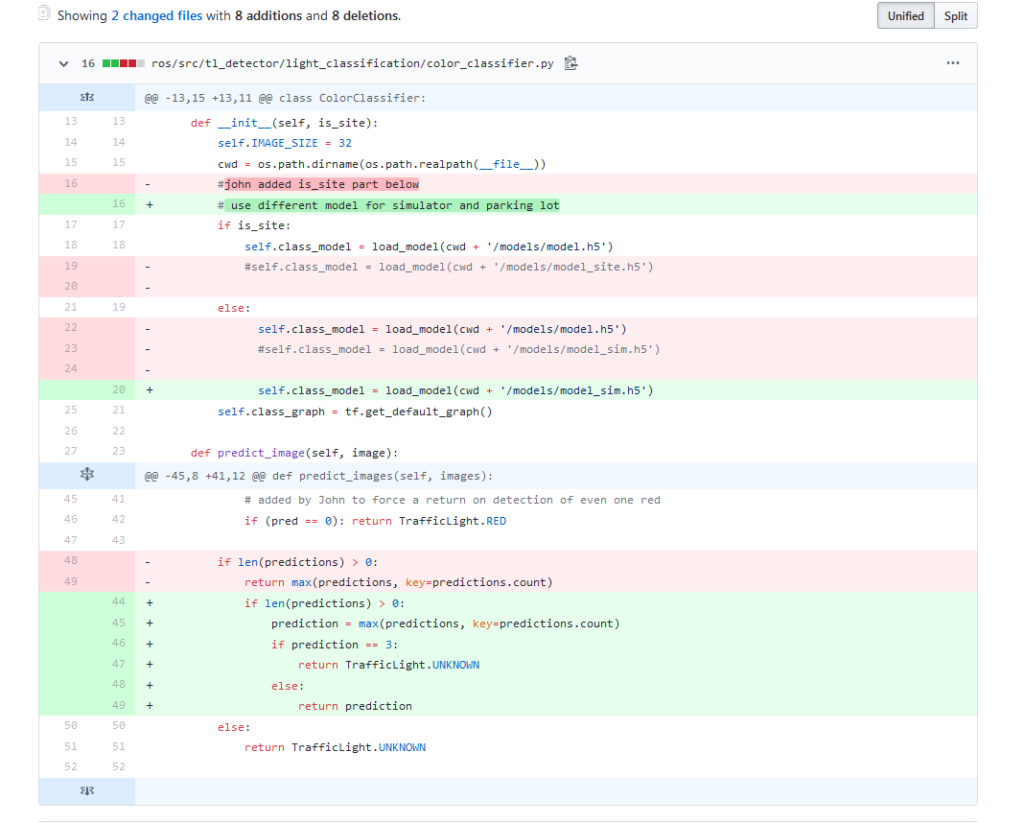

Coordinating the work between everybody was done with a code sharing website called GitHub.com. This is a picture of GitHub in action. Here you can see changes in code being approved and merged into the main body of code, called the master branch. One of the great things about the Udacity projects is that they encourage the use of GitHub and provide free courses on how to use it. GitHub is very popular right now in coding, and using it allows us to develop useful skills that are needed for work even though they are not directly part of a programming language.

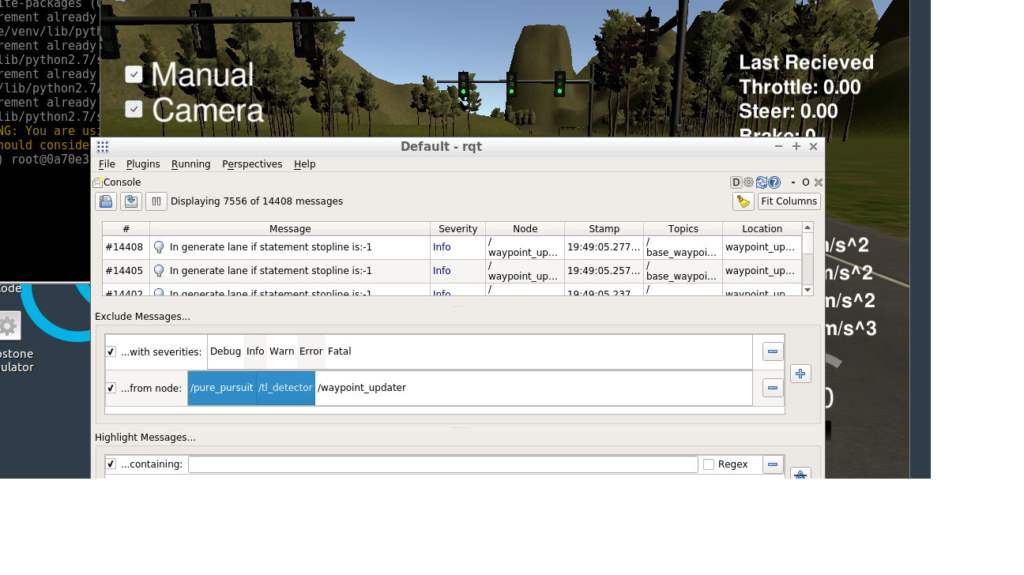

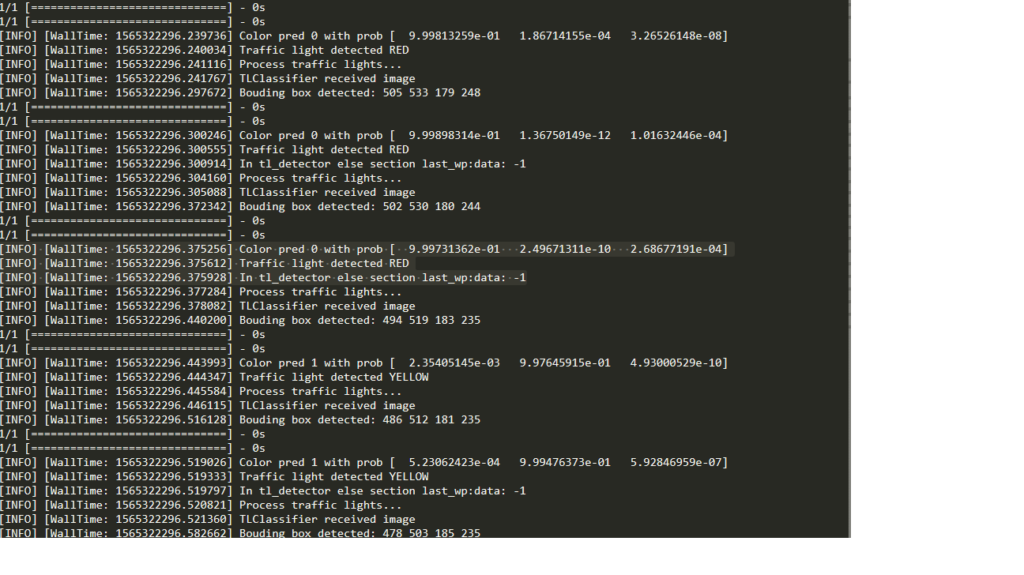

These are some images of output logs. Some of the information came from ROS and some came from messages we wrote ourselves and displayed on the screen. When you are trying to figure out what is going wrong you need to look closely at this kind of output to find the problem. For example, one problem I found was that the car would detect and correctly classify a RED traffic light, but then it might mistakenly classify the same RED light as UNKNOWN. This would throw off the decision making of the car. So we had to adjust the program to account for occasional imperfect traffic light classification, and that became a major focus of the project towards the final submission.
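One common way to tolerate the odd bad classification is to only act on a new light state once it has been seen several frames in a row. The sketch below shows that idea only, it is not necessarily what our final code did: the class name `LightStateFilter` and the threshold of 3 frames are assumptions for the example.

```python
class LightStateFilter:
    """Only accept a light state after `threshold` consecutive sightings."""

    def __init__(self, threshold=3):
        self.threshold = threshold  # assumed number of frames required
        self.candidate = None       # state currently being counted
        self.count = 0              # consecutive frames showing candidate
        self.confirmed = "UNKNOWN"  # last state we actually trust

    def update(self, state):
        if state == self.candidate:
            self.count += 1
        else:
            # A different state appeared: start counting again.
            self.candidate = state
            self.count = 1
        if self.count >= self.threshold:
            self.confirmed = state
        return self.confirmed


light_filter = LightStateFilter(threshold=3)
frames = ["RED", "RED", "RED", "UNKNOWN", "RED", "RED", "GREEN", "GREEN", "GREEN"]
trusted = [light_filter.update(state) for state in frames]
print(trusted)
# ['UNKNOWN', 'UNKNOWN', 'RED', 'RED', 'RED', 'RED', 'RED', 'RED', 'GREEN']
```

The single UNKNOWN in the middle never reaches the planner, so the car keeps treating the light as RED. The price is a delay of a couple of frames before a genuine change to GREEN is accepted.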

Path Planning

PID

Particle Filter

Unscented Kalman Filter

Kalman Filter

Vehicle Detection

Behavioral Cloning

Advanced Lane Lines

Traffic Sign Classifier

Finding Lane Lines

Finally, after months of team work, a few resubmissions and many hours of looking at logs and retraining classifiers, we got back this video. You can see the car slowing down before the RED light and about to stop when the light goes green. In earlier attempts the car failed to recognise the red light and motored past quite quickly, so this was great. Maybe the car could have moved towards the light faster and stopped before the light changed to green, but I think that would be unrealistic. You don’t accelerate towards a red light, you just gently move forward, so I was happy with this.

Looking back on the whole Nanodegree Program, it really is extraordinary. The team behind it is impressive, and the concepts they teach through projects are as demanding as any postgraduate course I have ever seen. The technologies involved, such as TensorFlow and ROS, are up to the minute, and it is very difficult to find any teaching material in this niche, never mind to this level. I had a few objectives in mind when I started out on this path more than 20 months ago. I wanted to update my programming skills first and foremost. With the prerequisite courses in Python and C++ I was able to get to where many undergraduate college courses might take you, but the Nanodegree is easily postgraduate level. I learned things in this course that I didn’t know existed! Machine learning, object detection and image classification are truly amazing topics to learn about, but many projects also used C++ and very advanced mathematics. I will never forget the Kalman filter! I had done Digital Signal Processing (DSP) at Masters level and some advanced maths such as the Discrete Cosine Transform, but that was nothing compared to the Kalman filter’s linear algebra. As for the future, I did this course to take myself in a new direction and be part of the autonomous vehicle revolution that is sweeping the world. What my place in that will be remains to be seen, but I certainly feel I have learned enough to make a real contribution to companies in this space, and really that’s what I was hoping for. I would absolutely recommend the course to anyone.